As we come closer to closing out the second decade of the 21st century, it is easy to look back on how far technology has come over the last ten years. We all gained online streaming services like Netflix and Hulu, tablets and more powerful mobile devices, and even the first Keurig. Rather than continuing to reminisce about technological firsts and advancements, however, it is exciting to look to where we are headed in 2019.

Of all the technological trends in store for 2019, one of the most obvious and anticipated is the growth of use cases and improvements around autonomous technology. While autonomy is becoming ingrained in multiple facets of daily life for consumers, self-driving vehicles are the shiny objects that people keep turning their attention toward. When we see a car traveling down the road with no one in the driver’s seat, some people may be alarmed, while others may be amazed.

Based in Palo Alto, California, camera technology company Light is looking to further change the game when it comes to imaging in self-driving cars. Having already introduced a 16-lens computational imaging camera for consumers, the company now plans to incorporate its technology into other use cases. To provide a suite of B2B solutions, Light is looking to improve the technology that is already making its way into the market.

Google started its self-driving car project back in 2009 and has since logged millions of miles in testing. While real-world applications of the technology are becoming more common through the likes of Tesla and ride-sharing company Uber, we are nowhere near having a proven and safe self-driving vehicle. As recently as May of this year, Uber made an announcement regarding the March 18th death of a pedestrian. Uber stated that the death was caused by the car’s sensors mistakenly treating the pedestrian as a false positive, so the car failed to swerve in time to avoid her.

Light’s advanced vision technology is specifically tailored for autonomous vehicles and robots, as opposed to repurposing other imaging technology for the application and possibly having issues with environment recognition. Its lens array and software-defined camera system improve with every image taken, allowing machines to navigate three-dimensional space more efficiently. Current prototypes integrate the technology into the back of the vehicle’s rear-view mirror.

Overall, technology in this arena is moving away from relying on individual sensors placed all over a vehicle in order for it to “see” its surroundings. Instead, sensors are being supplemented with imaging platforms containing powerful software and algorithms that stitch multiple images together into a single high-quality, highly accurate image. This is essentially the same process the human brain performs to interpret what our eyes are capturing. The brain translates what our eyes see into “pictures” we can easily understand, including our position and orientation within our surrounding environment.

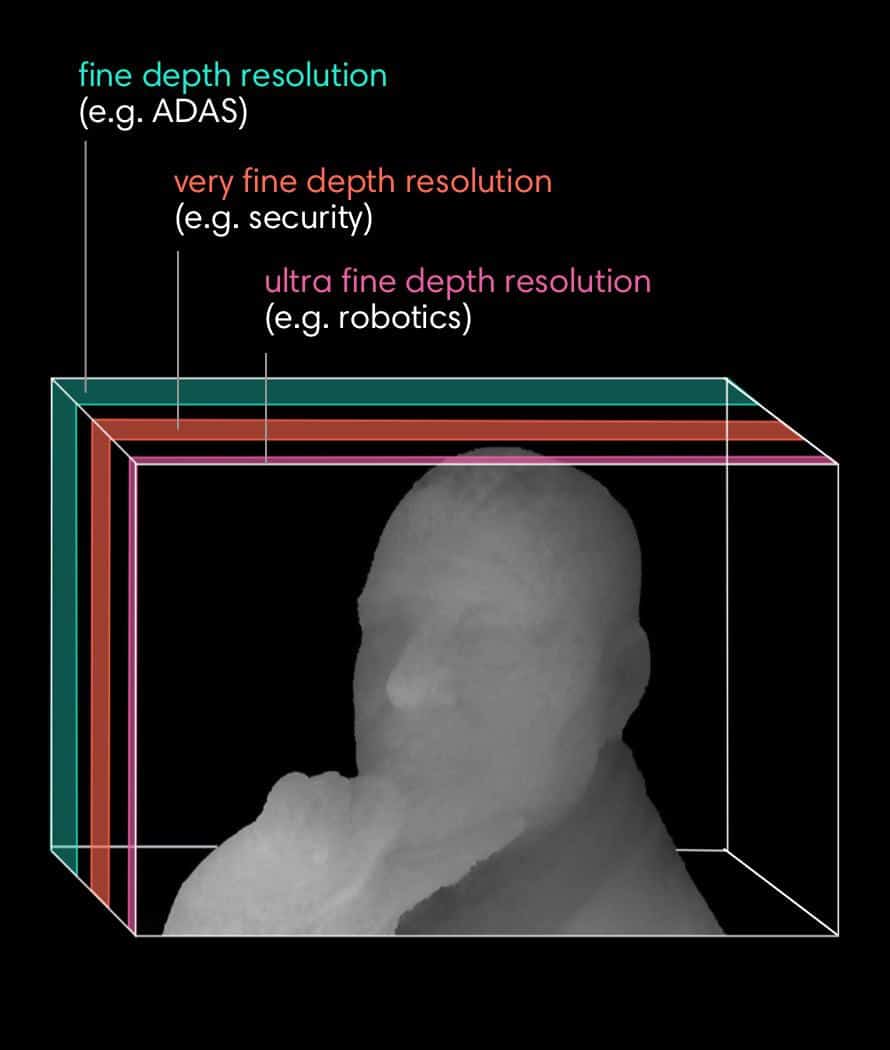

Depth resolution is integral to making imaging systems powerful enough for real-world safety in self-driving vehicles. Imaging systems traditionally provide fine, very fine, or ultra-fine depth resolution, depending on what the application requires. For example, fine depth resolution is used in several advanced driver-assistance systems, such as forward collision warnings. Ultra-fine depth resolution, however, is required for robotics used in manufacturing and assembly, medicine, or laboratory research.
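
For readers who want a feel for what depth resolution means in practice, here is a minimal sketch. It assumes a simple two-camera stereo model, which is a textbook simplification and not a description of Light's system. The function names and the example numbers (focal length, camera spacing) are made up for illustration. In this model, depth is focal length times camera spacing divided by disparity, and the smallest depth difference the system can tell apart grows with the square of the distance.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in meters for an idealized two-camera rig: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_resolution(focal_px, baseline_m, depth_m, disparity_step_px=1.0):
    """Approximate smallest resolvable depth change at a given distance.

    Uses the standard approximation dZ = Z^2 * dd / (f * B), where dd is
    the smallest disparity change the system can measure.
    """
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Illustrative (assumed) values: 1000 px focal length, cameras 0.5 m apart.
    focal_px, baseline_m = 1000.0, 0.5
    for depth_m in (10.0, 50.0):
        step = depth_resolution(focal_px, baseline_m, depth_m)
        print(f"At {depth_m:.0f} m, depth steps are roughly {step:.2f} m apart")
```

Under these assumed numbers, objects five times farther away are resolved about 25 times more coarsely, which is one reason distant obstacles are harder for a camera-based system to place accurately.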

As real-world applications are requiring increased accuracy, and safety in the case of self-driving vehicles, these systems are becoming increasingly advanced. With the imaging systems getting better, artificial intelligence and software algorithms need to follow suit as well.

One current method for teaching artificial intelligence about the world and various environments is quite surprising to many. Ultra-realistic, high-definition video games are being deployed to help computers become more intelligent. The game that is paving the way: Grand Theft Auto 5. Originally made popular by its open-world gameplay and fast cars, the game is now lending its realistic, interactive environments and landscapes, as well as its driver AI, to several different research groups who are working to enhance machine learning capabilities.

With environments getting exponentially more realistic with the various iterations of each of the top-selling games, the data generated from games like Grand Theft Auto 5 is just as good as the data that would be generated by the world around us.

NIO, a San Jose-based electric car startup, is one such company currently utilizing Grand Theft Auto 5 in its driverless car program. According to Davide Bacchet, former director of NIO’s Autonomous Driving and Simulation, “just relying on data from the roads is not practical… [Grand Theft Auto 5 provides] the richest virtual environment that we could extract data from.” The NIO team has plans to make self-driving cars road-ready by 2020.

Researchers from Intel Labs and Darmstadt University in Germany are also utilizing Grand Theft Auto 5. Their teams created a layer of software that sits between the Grand Theft Auto 5 game and the computer hardware. This layer allows them to automatically classify and organize objects in various environments like bustling city streets and winding country roads.

Although this is just one way that artificial intelligence is shaping the future of automation, there are endless ways that technology will impact our day-to-day lives. Looking forward to 2019, not only are the real-world implications promising when it comes to business and jobs, but there are multiple implications for our personal lives. There will be increasing instances of personal AI assistants, news and media tailored specifically for us as individuals, and technology out on the roads with us in the form of self-driving vehicles.

While the notion of self-driving vehicles may be alarming given the current state of affairs and rocky history around the technology, the industry is ever-changing, and improvements are continuous. Research teams all around the world, backed by hundreds of millions of dollars, are continuously working to improve the effectiveness and safety of the technology.

Author Bio:

Amanda Powell is an avid lover of all things technology and innovation who resides in the San Francisco Bay Area. When she’s not busy taking photos, she’s reading up on the latest technology trends.